Overview

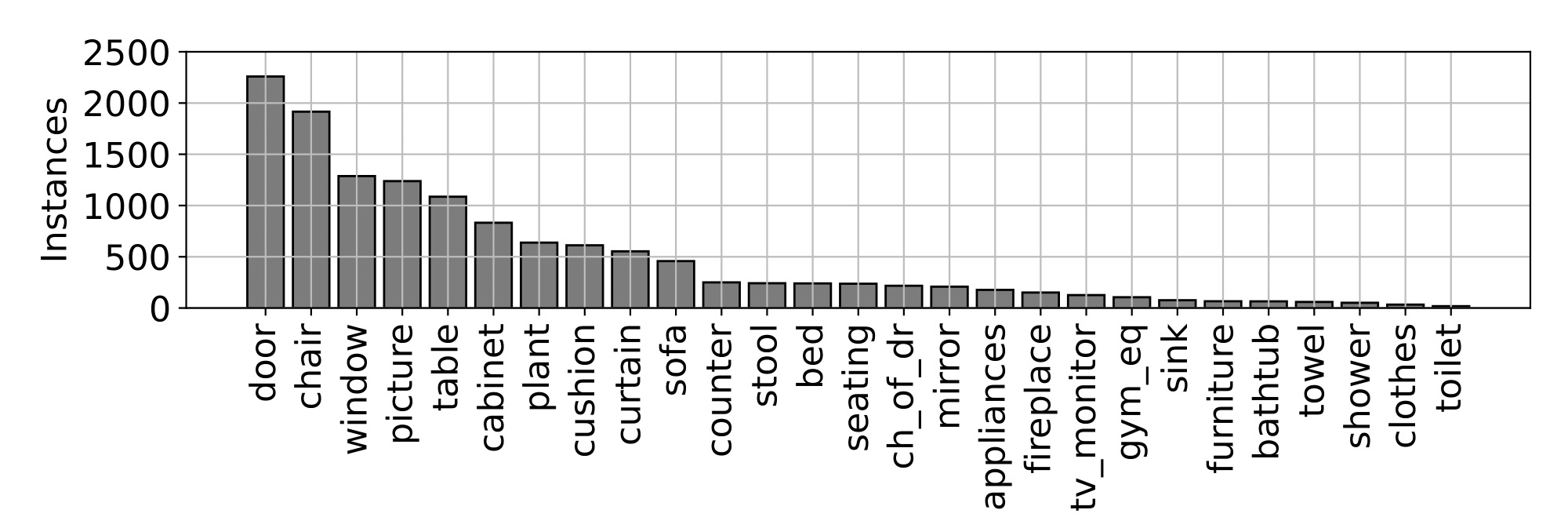

This dataset is designed to evaluate active recognition agents in indoor environments. It contains 13,200 testing samples covering 27 common object categories.

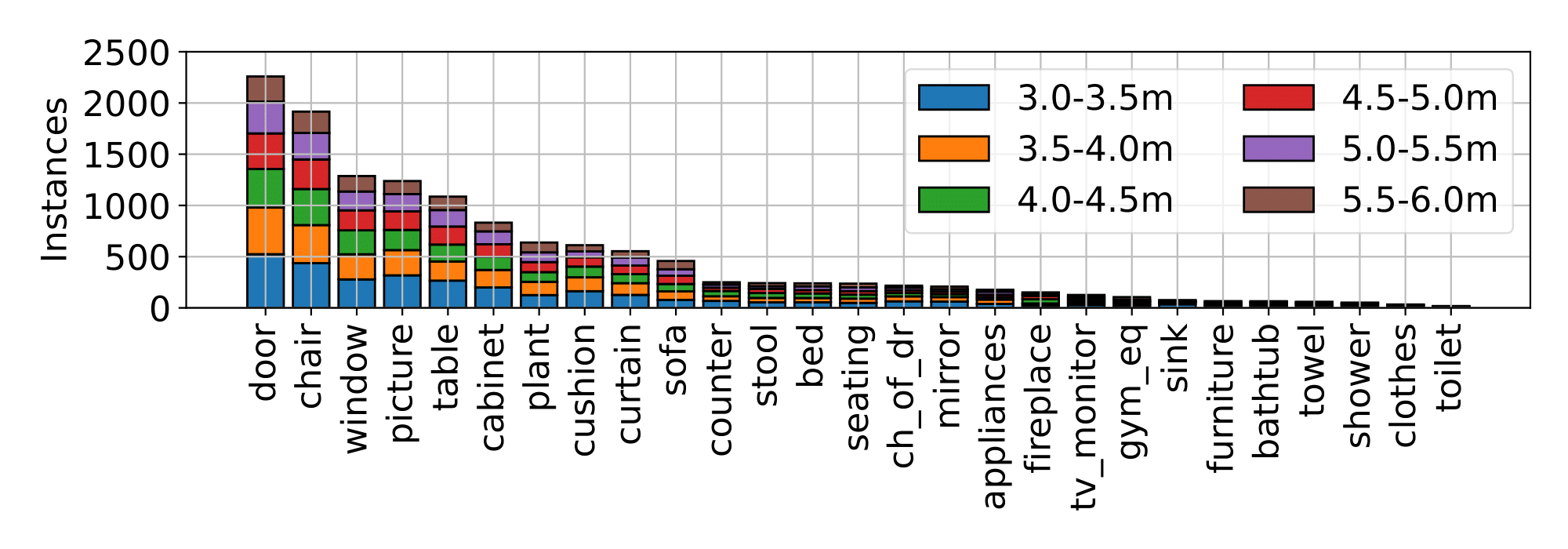

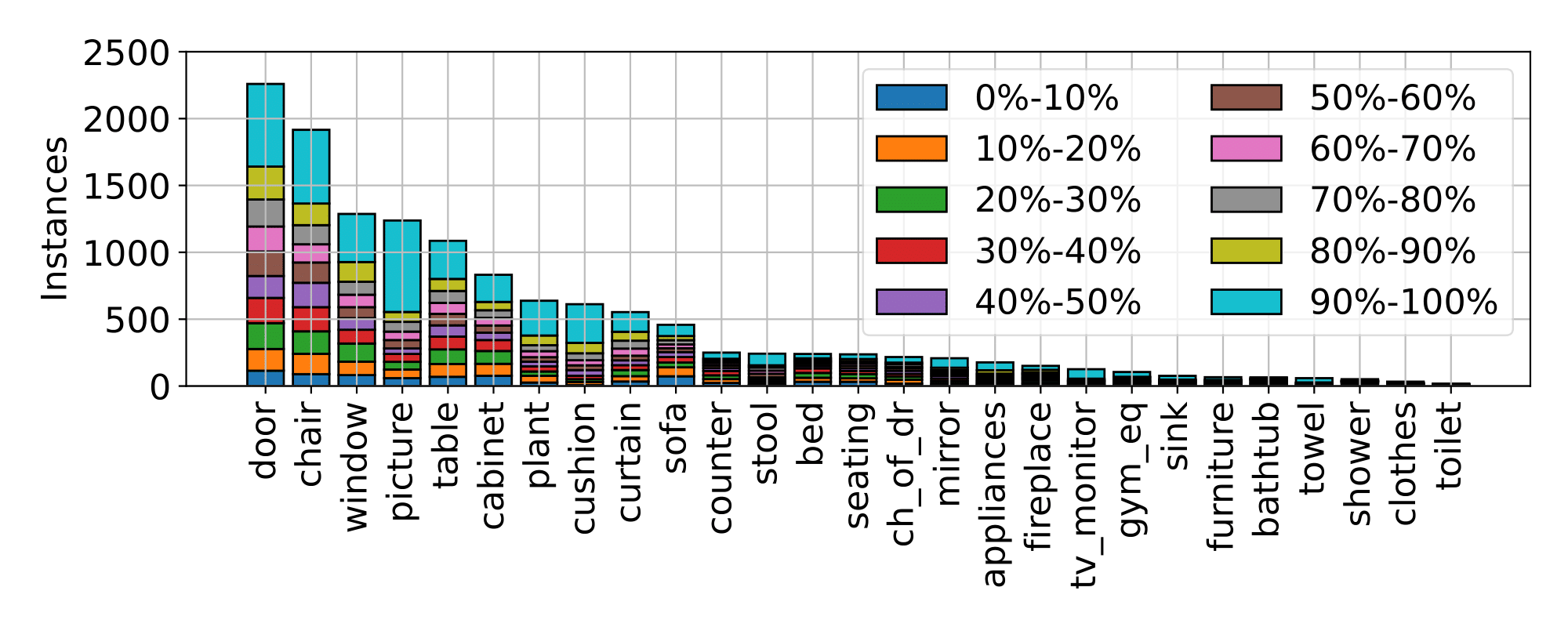

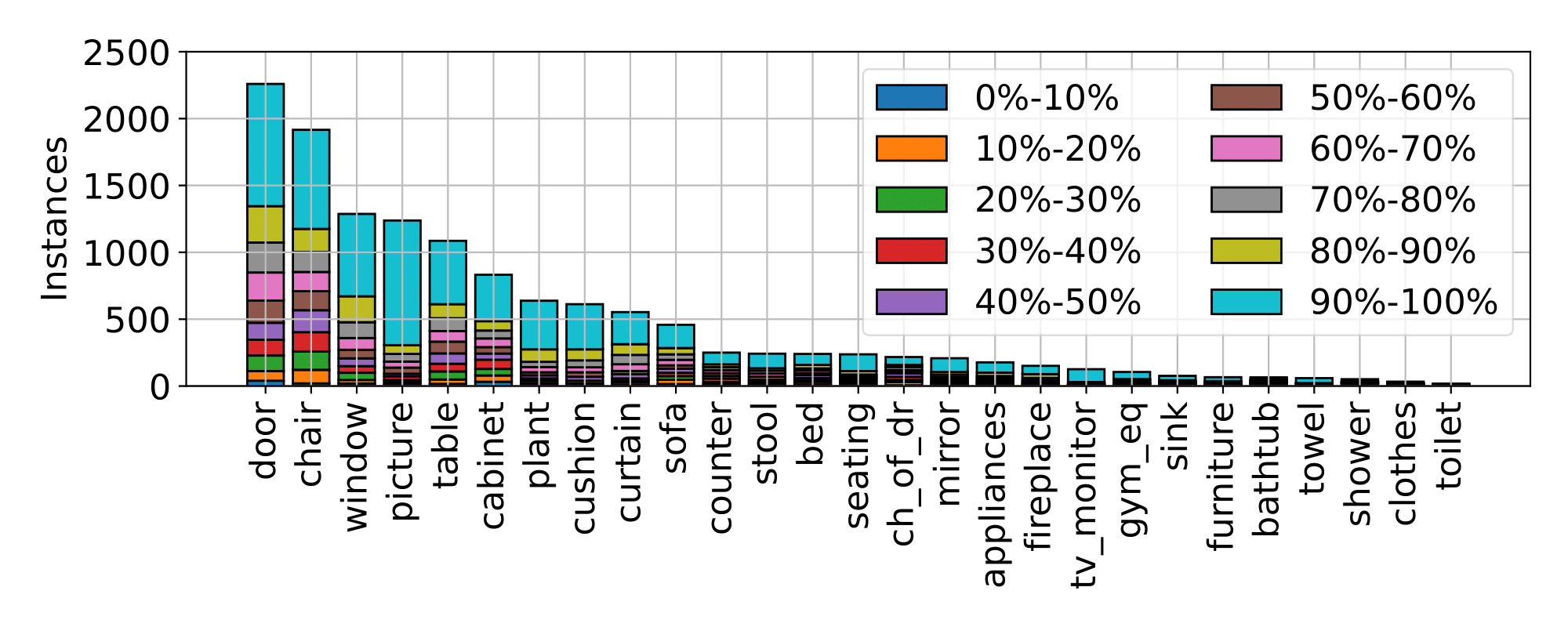

Moreover, to show how active recognition can overcome recognition challenges by moving intelligently, we assign a recognition difficulty level to each testing sample, based on the target's visibility, its distance, and the number of observed pixels.

Two testing examples from the proposed dataset.

In the above figure, the target object is covered by a green mask, which allows for box, point, or mask queries during testing. The two targets are partially occluded by the wall and the bed, respectively. Visibility is calculated as the ratio of observed pixels to the total number of pixels belonging to the target. The agent is then allowed to move freely in the scene to avoid unfavorable recognition conditions and achieve better recognition performance.
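
The visibility ratio described above can be sketched in a few lines. This is only an illustrative example, not the dataset's own tooling: the function names `visibility` and `mask_to_box`, and the representation of masks as nested lists of 0/1 values, are assumptions made for this sketch. The second helper shows one possible way a box query could be derived from a mask.

```python
from typing import Sequence, Tuple

# Hypothetical mask format: rows of 0/1 values (1 = pixel belongs to the target).
Mask = Sequence[Sequence[int]]


def visibility(observed_mask: Mask, full_mask: Mask) -> float:
    """Ratio of observed target pixels to all pixels belonging to the target."""
    total = 0
    observed = 0
    for obs_row, full_row in zip(observed_mask, full_mask):
        for obs, full in zip(obs_row, full_row):
            if full:
                total += 1
                if obs:
                    observed += 1
    if total == 0:
        raise ValueError("full_mask contains no target pixels")
    return observed / total


def mask_to_box(mask: Mask) -> Tuple[int, int, int, int]:
    """Tight (x_min, y_min, x_max, y_max) box around the nonzero pixels."""
    coords = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    if not coords:
        raise ValueError("mask is empty")
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    return min(xs), min(ys), max(xs), max(ys)


# Toy example: a 2x2 target whose right half is hidden by an occluder.
full = [
    [0, 1, 1, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
seen = [
    [0, 1, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 0],
]
print(visibility(seen, full))
print(mask_to_box(seen))
```

In this toy case half of the target's pixels are observed. With real data the masks would more likely be image-sized arrays, and the same ratio could be computed with array operations instead of explicit loops.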