Greetings from Bellevue.

I am currently an Applied Scientist on the Amazon Alexa AI team. My current focus includes video QA, MLLM post-training, and embodied perception.

Previously, I was a Ph.D. candidate at Northwestern University under the supervision of Prof. Ying Wu. My research interests lie at the intersection of computer vision and robotics, with a particular emphasis on active vision (where the agent is endowed with the ability to move and perceive). I investigate the challenges that active vision agents face in an open-world context. These challenges include, but are not limited to, continual learning, few-sample learning, uncertainty quantification, and vision-language models.

Prior to my Ph.D., my research primarily focused on perception for autonomous vehicles, encompassing areas such as stereo vision, 3D mapping, moving-object detection, and map repair.

My detailed resume/CV is here (last updated in July 2025).

🔥 News

- 2025.02: 🎉 One co-authored paper on visual localization under extreme viewpoint changes has been accepted to CVPR 2025! Congratulations to Yunxuan and the other authors.

- 2024.10: 🎉 One co-authored paper on visual question answering has been accepted to EMNLP 2024! Congratulations to Xiaoying and the other authors.

- 2024.05: The proposed dataset to evaluate active recognition has been made publicly available! Please refer to the page for details.

- 2024.04: 🎉 I have successfully defended my Ph.D.! I would like to extend my gratitude to my committee: Prof. Ying Wu, Prof. Qi Zhu, and Prof. Thrasos N. Pappas. I will join Amazon as an Applied Scientist this summer!

- 2024.02: 🎉 Two papers on active recognition for embodied agents have been accepted by CVPR 2024! Thanks to all my collaborators!

- 2023.07: 🎉 Our paper on uncertainty estimation has been accepted to ICCV 2023! Appreciation goes out to all advisors: Dr. Bo Liu, Dr. Haoxiang Li, Prof. Ying Wu, and Prof. Gang Hua!

📖 Education

- 2019 - 2024, M.S., Ph.D. in Electrical Engineering, advised by Prof. Ying Wu, Northwestern University.

- 2013 - 2019, B.E., M.S. in Computer Science, advised by Prof. Long Chen, Sun Yat-sen University.

📝 Publications

Active Open-Vocabulary Recognition: Let Intelligent Moving Mitigate CLIP Limitations

Lei Fan, Jianxiong Zhou, Xiaoying Xing, Ying Wu

Poster | Project (coming soon) | Video

- Investigate CLIP’s limitations in embodied perception scenarios, emphasizing diverse viewpoints and occlusion degrees.

- Propose an active agent to mitigate CLIP’s limitations, aiming for active open-vocabulary recognition.

Evidential Active Recognition: Intelligent and Prudent Open-World Embodied Perception

Lei Fan, Mingfu Liang, Yunxuan Li, Gang Hua, Ying Wu

Supplementary | Poster | Dataset | Project (coming soon) | Video

- Handle unexpected visual inputs during an embodied agent’s training and testing in open environments.

- Collect a dataset for evaluating active recognition agents. Each testing sample is accompanied by a recognition difficulty level.

- Apply evidential deep learning and evidence combination for frame-wise information fusion, mitigating interference from unexpected images.

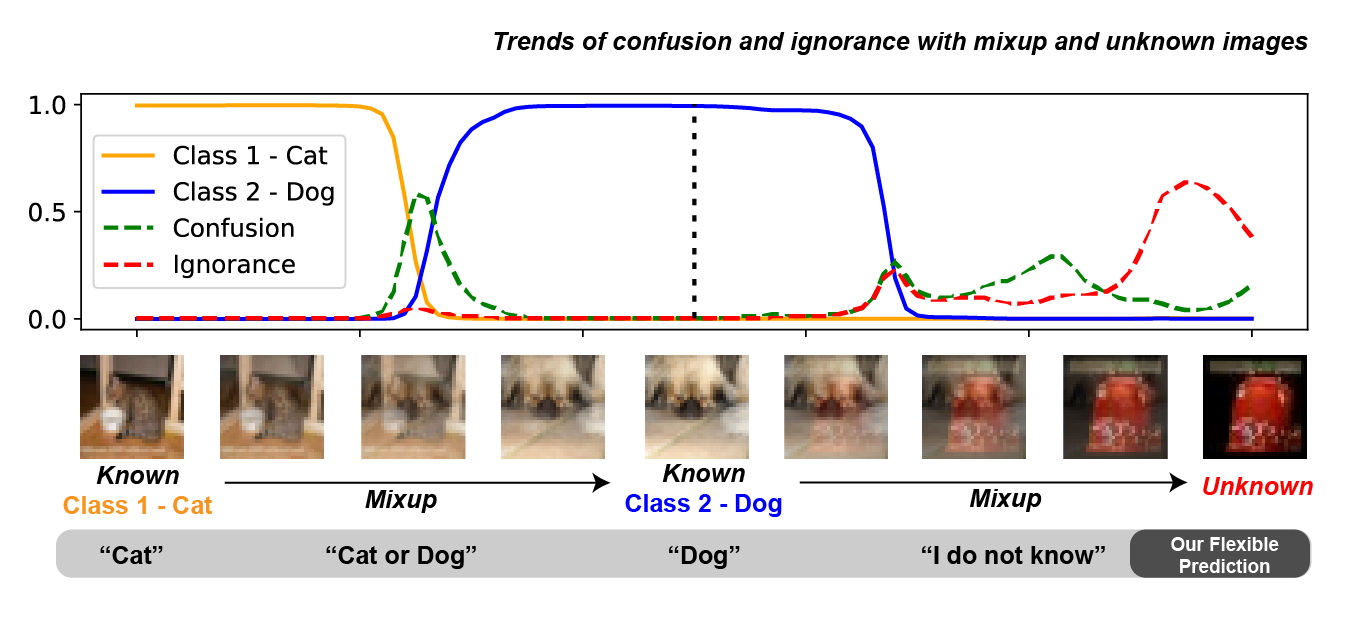

Flexible Visual Recognition by Evidential Modeling of Confusion and Ignorance

Lei Fan, Bo Liu, Haoxiang Li, Ying Wu, Gang Hua

Supplementary | Poster | Project | Code

- Modeling both confusion and ignorance with hyper-opinions.

- Proposing a hierarchical structure with binary plausible functions to handle the challenge of 2^K predictions.

- Experiments with synthetic data, flexible visual recognition, and open-set detection validate our approach.

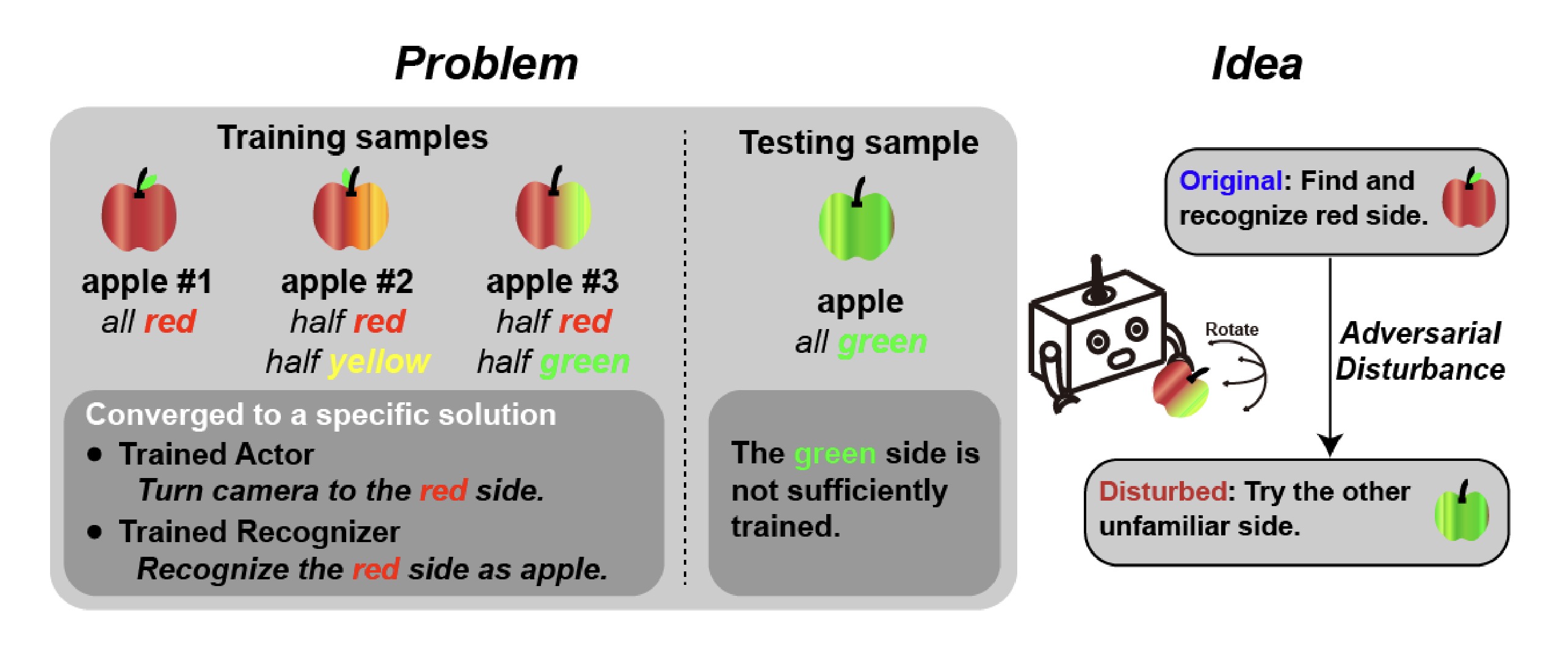

Avoiding Lingering in Learning Active Recognition by Adversarial Disturbance

Lei Fan, Ying Wu

- Lingering: The joint learning process could lead to unintended solutions, like a collapsed policy that only visits views that the recognizer is already sufficiently trained to obtain rewards.

- Our approach integrates an additional adversarial policy that disturbs the recognition agent during training, forming a competitive game that promotes active exploration and avoids lingering.

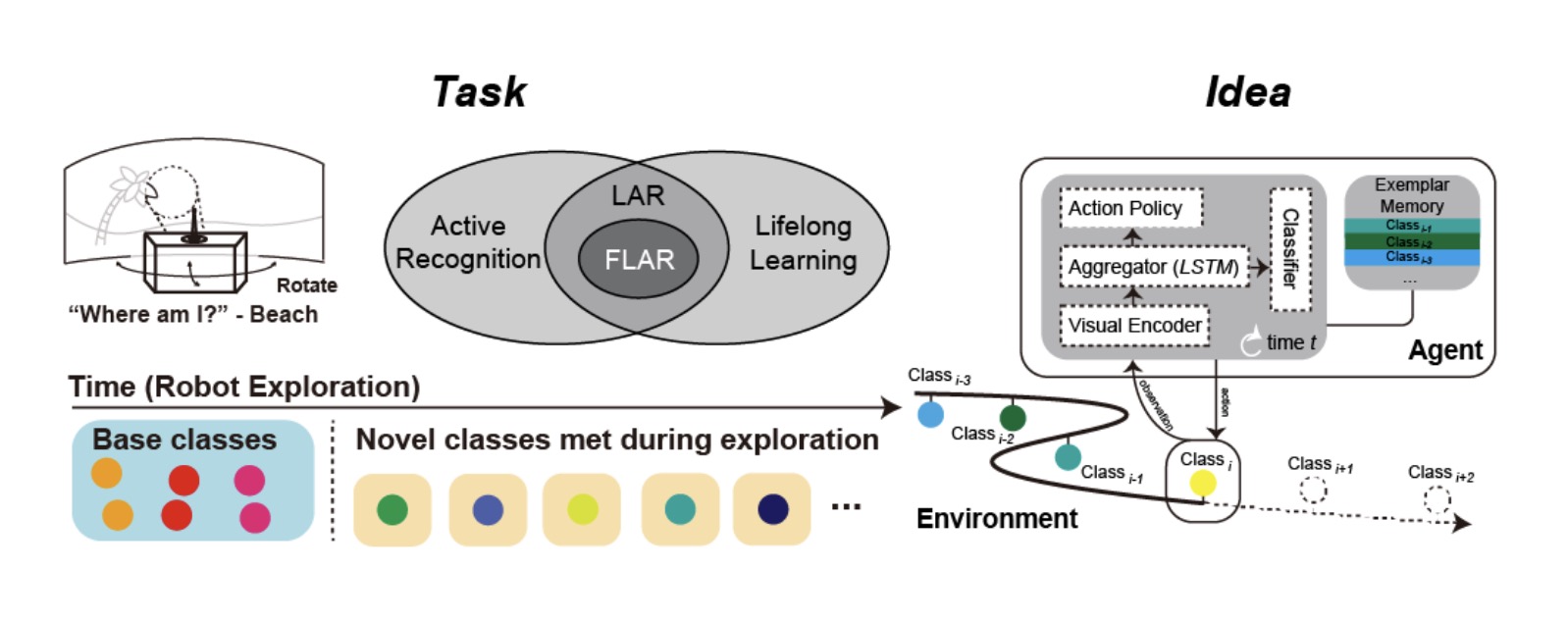

FLAR: A Unified Prototype Framework for Few-sample Lifelong Active Recognition

Lei Fan, Peixi Xiong, Wei Wei, Ying Wu

- The active recognition agent needs to incrementally learn new classes with limited data during exploration.

- Our approach integrates prototypes, a robust representation for limited training samples, into a reinforcement learning solution, which motivates the agent to move towards views resulting in more discriminative features.

- GPVK-VL: Geometry-Preserving Virtual Keyframes for Visual Localization under Large Viewpoint Changes, Yunxuan Li, Lei Fan, Xiaoying Xing, Jianxiong Zhou, and Ying Wu, IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2025.

- Learning to Ask Denotative and Connotative Questions for Knowledge-based VQA, Xiaoying Xing, Peixi Xiong, Lei Fan, Yunxuan Li, and Ying Wu, Findings of the Association for Computational Linguistics: EMNLP (EMNLP Findings), 2024.

- Unsupervised Depth Completion and Denoising for RGB-D Sensors, Lei Fan, Yunxuan Li, Chen Jiang, and Ying Wu, IEEE International Conference on Robotics and Automation (ICRA), 2022.

- SemaSuperpixel: A Multi-channel Probability-driven Superpixel Segmentation Method, Xuehui Wang, Qingyun Zhao, Lei Fan, Yuzhi Zhao, Tiantian Wang, Qiong Yan, and Long Chen, IEEE International Conference on Image Processing (ICIP), 2021.

- Lightweight Single-Image Super-Resolution Network with Attentive Auxiliary Feature Learning, Xuehui Wang, Qing Wang, Yuzhi Zhao, Junchi Yan, Lei Fan, and Long Chen, Asian Conference on Computer Vision (ACCV), 2020.

- Toward the Ghosting Phenomenon in a Stereo-Based Map With a Collaborative RGB-D Repair, Jiasong Zhu, Lei Fan, Wei Tian, Long Chen, Dongpu Cao, and Fei-Yue Wang, IEEE Transactions on Intelligent Transportation Systems (Tran-ITS), 2020.

- Monocular Outdoor Semantic Mapping with a Multi-task Network, Yucai Bai, Lei Fan, Ziyu Pan, and Long Chen, IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2019.

- Collaborative 3D Completion of Color and Depth in a Specified Area with Superpixels, Lei Fan, Long Chen, Chaoqiang Zhang, Wei Tian, and Dongpu Cao, IEEE Transactions on Industrial Electronics (TIE), 2018.

- Planecell: Representing Structural Space with Plane Elements, Lei Fan, Long Chen, Kai Huang, and Dongpu Cao, IEEE Intelligent Vehicles Symposium (IV), 2018. Best Student Paper.

- A Full Density Stereo Matching System Based on the Combination of CNNs and Slanted-planes, Long Chen, Lei Fan, Jianda Chen, Dongpu Cao, and Feiyue Wang, IEEE Transactions on Systems, Man, and Cybernetics: Systems (TSMCS), 2017.

- Let the Robot Tell: Describe Car Image with Natural Language via LSTM, Long Chen, Yuhang He, and Lei Fan, Pattern Recognition Letters (PRL), 2017.

- Moving-Object Detection from Consecutive Stereo Pairs using Slanted Plane Smoothing, Long Chen, Lei Fan, Guodong Xie, Kai Huang, and Andreas Nuchter, IEEE Transactions on Intelligent Transportation Systems (Tran-ITS), 2017.

- RGB-T SLAM: A Flexible SLAM Framework by Combining Appearance and Thermal Information, Long Chen, Libo Sun, Teng Yang, Lei Fan, Kai Huang, and Zhe Xuanyuan, IEEE International Conference on Robotics and Automation (ICRA), 2017.

💻 Internships

- 2023.06 - 2023.09, Applied Scientist Intern, Amazon Robotics, Seattle, US.

  - Topic: Surface normal estimation and stability analysis.

  - Advisors: Dr. Shantanu Thaker, Dr. Sisir Karumanchi.

- 2022.06 - 2022.09, Research Intern, Wormpex AI Research, Bellevue, US.

  - Topic: Uncertainty quantification for deep visual recognition.

  - Advisors: Dr. Bo Liu, Dr. Haoxiang Li, and Dr. Gang Hua.

- 2020.06 - 2020.09, Research Intern, Yosion Analytics, Chicago, US.

  - Topic: Autonomous forklift in a human-machine co-working environment.

- 2016.06 - 2016.09, Visual Engineer Intern, DJI, Shenzhen, China.

  - Topic: Stereo matching using the fish-eye cameras on drones.

🎖 Honors and Awards

- 2019.09 Northwestern University Murphy Fellowship.

- 2018.06 Best Student Paper, IEEE Intelligent Vehicles Symposium.

- 2019.09 National Merit Scholarship, China.